Intel’s Foundry Day Focuses on Advanced Packaging

By Jean S. Bozman

Intel Corp. made significant announcements in its diversification strategy on Feb. 21, 2024, showing the ways in which its Intel Foundry business will partner with semiconductor companies, design-software companies, and new customers to deliver advanced packaging for next-generation compute and networking processors.

At its Direct Connect event, a series of announcements and newly announced partnerships surrounded the Intel Foundry launch, rounding out a full day of Intel Foundry news. One of the day’s key messages is that Intel will provide a stable, secure, and consistent supply chain for a wide variety of microprocessors for data centers, networking, and consumer uses.

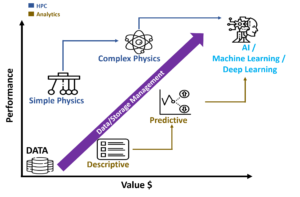

Central to this story is the idea that AI will drive a new era of compute, requiring heterogeneous compute engines co-resident on microprocessors; function-specific chiplets on microprocessors; and a new wave of software design tools to enable AI workloads on next-generation chips.

The rapid adoption of AI, including generative AI (e.g., ChatGPT) and operational AI to manage data and applications, is driving substantial growth in the semiconductor segment – giving Intel the opportunity to grow revenues and profits more quickly than during the pandemic years (2020-2023).

Things Are Changing in the World’s Data Centers

This emerging world of heterogeneity will require fast engines – and fast interconnects between them, along with customer adoption of networking and interconnect standards and the use of flexible EDA chip-design software from Synopsys, Siemens, Cadence, and others.

As a result, the deployment of next-gen systems will likely be very different from what it was in the 1990s, 2000s, and 2010s. Intel envisions a world of AI-enabled compute engines housed within a series of very dense racks – with new power and cooling technologies to prevent overheating in AI clusters and to support sustainability.

This vision fits with IDC’s view that net-new IT adoption will happen in cloud provider data centers about as much as it does in enterprise data centers, resulting in a 50/50 mix of new system deployments worldwide. In that world, distributed computing is based on a mix of onboard capabilities rather than traditional monolithic designs.

Further, the compute engines themselves will be deployed within enterprise data centers and large cloud provider (CSP) data centers located around the globe – making secure and consistent supply chains vital to the next generation of AI deployments around the world. That was the “mantra” of the Intel Foundry announcements on Feb. 21.

Geographic Considerations

The current distribution of large fabs and foundries around the world is largely centered in Asia – in China, Taiwan, Korea, and Southeast Asia. Intel is currently constructing new Foundry locations in western Europe and North America – places that have many microprocessor customers and relatively few foundries nearby.

Today, the chips that are made in Intel’s fabs are used primarily by Intel, but the company hopes to shift to a partner model, in which Intel customers use EDA software to design their new chips, and Intel engineers manufacture those new designs – doing so with advanced packaging, high quality, and shorter time to market.

Clearly, Intel is not alone in building new fabs, but it is planning and constructing fabs that will bring more foundries closer to the vendors and large enterprises that consume and deploy microprocessor chips. Sites either planned or under construction include fabs in Germany, Ireland, and Poland, as well as U.S.-based fabs in Oregon, New Mexico, and Arizona.

Intel Foundry Event Highlights

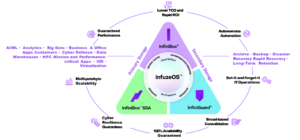

The daylong Intel Foundry conference spelled out the types of advanced packaging that will be required for microprocessors made by Intel (Core and Xeon), and by its longtime competitors – some of whom could become customers of the new Intel Foundry business. Examples of advanced packaging include multiple function-specific chiplets linked by fast interconnects; “tiles” to package the components into 2-D and 3-D arrays; memory pooling; and low-power energy sources.

The Intel Foundry Business

To set the tone for the daylong event, Intel renamed its Intel Foundry Services (IFS) business, which has design and manufacturing centers in the western U.S., Europe, and Asia. It is now named Intel Foundry – and is open for partnership with other tech companies that will design, but not manufacture, their future microprocessors and chiplets.

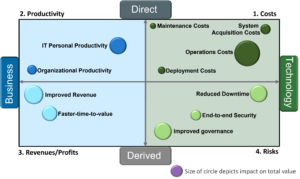

Now, the Intel Foundry business can be viewed as a wafer-fabrication, advanced-packaging, and manufacturing resource for customers who design their own chips – while supporting a worldwide supply chain that has “hubs” across multiple continents. That contrasts with the oft-quoted global distribution of foundries and fabs, in which about 80% of semiconductor manufacturing is in Asia today and only 20% is in the U.S. and Europe combined, Intel said. Intel plans to close the gap – moving to a 50-50 mix of distributed and central-site IT expertise.

The phenomenon of co-opetition (cooperating and competing over time) is already an element of the world’s foundry partnering opportunities, and will likely remain one. We’ve seen that pattern with other companies in the foundry business, including GlobalFoundries and Samsung. GlobalFoundries operates fabs in upstate New York and Singapore – and Samsung operates fabs in Austin, Texas, and South Korea.

As Intel grows its business, the Intel Foundry sites may choose to partner with other longtime semiconductor companies, earning new foundry business by providing advanced packaging, design, and manufacturing expertise. In this way, companies that may formerly have been seen as Intel competitors may become foundry partners. One example, announced at the event, is that Intel will partner with ARM to serve mutual foundry customers.

Software-based design will be key to this new era of Intel Foundry business. That’s why Intel announced big design-software partnerships with Synopsys, Siemens (Mentor Graphics), and Cadence at this conference – and why all three of these companies have their former CEOs on Intel Foundry’s new Advisory Board.

Worldwide, the largest foundry business belongs to TSMC, with multiple fabs in Taiwan, and at least one that is under construction in Arizona. TSMC forged a model of fabricating chips that other companies – its customers – designed for themselves, or designed with the help of third-party firms.

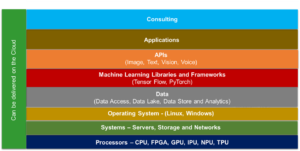

The Foundry Business Model

Establishing and delivering the advanced manufacturing techniques requires agreed-upon standards, adoption of interoperating interconnects between chips, high-speed networking – and the arrival of net-new technologies, such as AI-enabled consumer devices for the Edge, and what Intel has announced as its AI PC for business desktops.

Other elements needed for an increasingly disaggregated, AI-enabled computing world include security standards that isolate and protect “known-good” IT platforms and security-oriented “guardrails.”

On-chip interoperability between the individual compute engines, including chiplets, will be needed to harness as many compute and networking links as possible. The changing “physics” of manufacturing, with new materials, optical interconnects, glass substrates, and links like UCIe and Ultra Ethernet, is expected to accelerate the adoption of advanced packaging for Enterprise, Cloud, and Edge systems.

Intel’s strategy is to leverage its multi-billion-dollar investments – including its own capital and funding from the U.S. federal CHIPS Act – to build out an arc of U.S. and European fabs. This will support local regional markets – and provide a de facto “follow-the-sun” manufacturing cycle around the world. At the Feb. 21 event, U.S. Secretary of Commerce Gina Raimondo, who spoke via video, outlined the series of CHIPS-program grants that Intel is leveraging for the two new Ohio manufacturing plants it currently has under construction.

Partnerships will be key in growing the business – including partnerships with design-software providers and semiconductor vendors. In a prime example of Intel Foundry’s emerging business model, Intel and ARM announced a new partnership for next-gen platform manufacturing. This was one of the most surprising, and closely guarded, secrets prior to the February launch event. In past years, industry observers saw ARM as competing with Intel in the mobile-phone and consumer markets in which ARM designs were made by other foundries; this perception will likely shift, given the Intel-ARM partnership announcement.

The Importance of AI

AI and its rapid growth were a constant theme throughout Intel’s messages about its product and technology strategies. The reason is plain to see: The deep interest in AI, spurred by the emergence of ChatGPT in November 2022, is driving remarkable IT growth following the worldwide pandemic of 2020 – 2022.

Interestingly, both Microsoft and OpenAI participated in the Intel Foundry Direct Connect event. Specifically, Microsoft CEO Satya Nadella provided a video for the keynote with Intel CEO Pat Gelsinger – and OpenAI CEO Sam Altman provided his outlook for AI’s future in a closing 1:1 onstage discussion with Gelsinger.

In the keynote session, Microsoft CEO Nadella announced that Intel Foundry would be producing a processor that will be optimized for next-generation office software. The plan was specific enough to name the microprocessor – and the foundry process by which it will be manufactured. Microsoft’s big market reach will illustrate how the Foundry business model works.

The presence of both Microsoft and OpenAI may be doubly significant, in that Microsoft has already made substantial investments in OpenAI. Further, a potential $13 billion acquisition of OpenAI by Microsoft was widely discussed last year, prior to Sam Altman’s decision – within weeks – to remain in his role as OpenAI CEO.

Altman is known for his focus on the importance of AI research alongside building a product roadmap and a company. In his onstage conversation with Pat Gelsinger, at the close of the daylong Intel Foundry event, Sam Altman said he continues to believe in the promising future of AI – especially when it can be used to accelerate progress in important fields like human cognition, health care, medical research, and education.

Altman closed with this thought about the importance, and the future, of AI. “There are mega-new discoveries to find in front of us,” Altman said onstage. “But the fundamentals of this, is [that] deep learning works and it gets predictably better with scale.”

Copyright ©Jean S. Bozman, 2024, All rights reserved.